9/14/2016 by Lee Byron

After over a year of being open sourced we're bringing GraphQL out of "technical preview" and relaunching graphql.org.

For us at Facebook, GraphQL isn't a new technology. GraphQL has been delivering data to mobile News Feed since 2012. Since then it's expanded to support the majority of the Facebook mobile product and evolved in the process.

Early last year when we first spoke publicly about GraphQL we received overwhelming demand to share more about this technology. That sparked an internal project to revisit GraphQL, make improvements, draft a specification, produce a reference implementation you could use to try it out, and build new versions of some of our favorite tools, like GraphiQL. We moved quickly, and released parts that were ready along the way.

Part of Facebook's open source philosophy is that we want to open source only what is ready for production. While it's true that we had been using GraphQL in production at Facebook for years, we knew that these newly released pieces had yet to be proven. We expected feedback. So we carefully released GraphQL as a "technical preview."

Exactly one year ago, we published graphql.org, with a formal announcement that GraphQL was open source and ready to be "technically previewed". Since then we've seen GraphQL implemented in many languages, and successfully adopted by other companies. That includes today's exciting announcement of the GitHub GraphQL API, the first major public API to use GraphQL.

In recognition of the fact that GraphQL is now being used in production by many companies, we're excited to remove the "technical preview" moniker. GraphQL is production ready.

We've also revamped this website, graphql.org, with clearer and more relevant content in response to some of the most common questions we've received over the last year.

We think GraphQL can greatly simplify data needs for both client product developers and server-side engineers, regardless of what languages you're using in either environment, and we're excited to continue to improve GraphQL, support the already growing community, and see what we can build together.

5/5/2016 by Steven Luscher

Time and time again I hear the same aspiration from front-end web and mobile developers: they're eager to reap the developer efficiency gains offered by new technologies like Relay and GraphQL, but they have years of momentum behind their existing REST API. Without data that clearly demonstrates the benefits of switching, they find it hard to justify an additional investment in GraphQL infrastructure.

In this post I will outline a rapid, low-investment method that you can use to stand up a GraphQL endpoint atop an existing REST API, using JavaScript alone. No backend developers will be harmed during the making of this blog post.

We're going to create a GraphQL schema – a type system that describes your universe of data – that wraps calls to your existing REST API. This schema will receive and resolve GraphQL queries all on the client side. This architecture features some inherent performance flaws, but is fast to implement and requires no server changes.

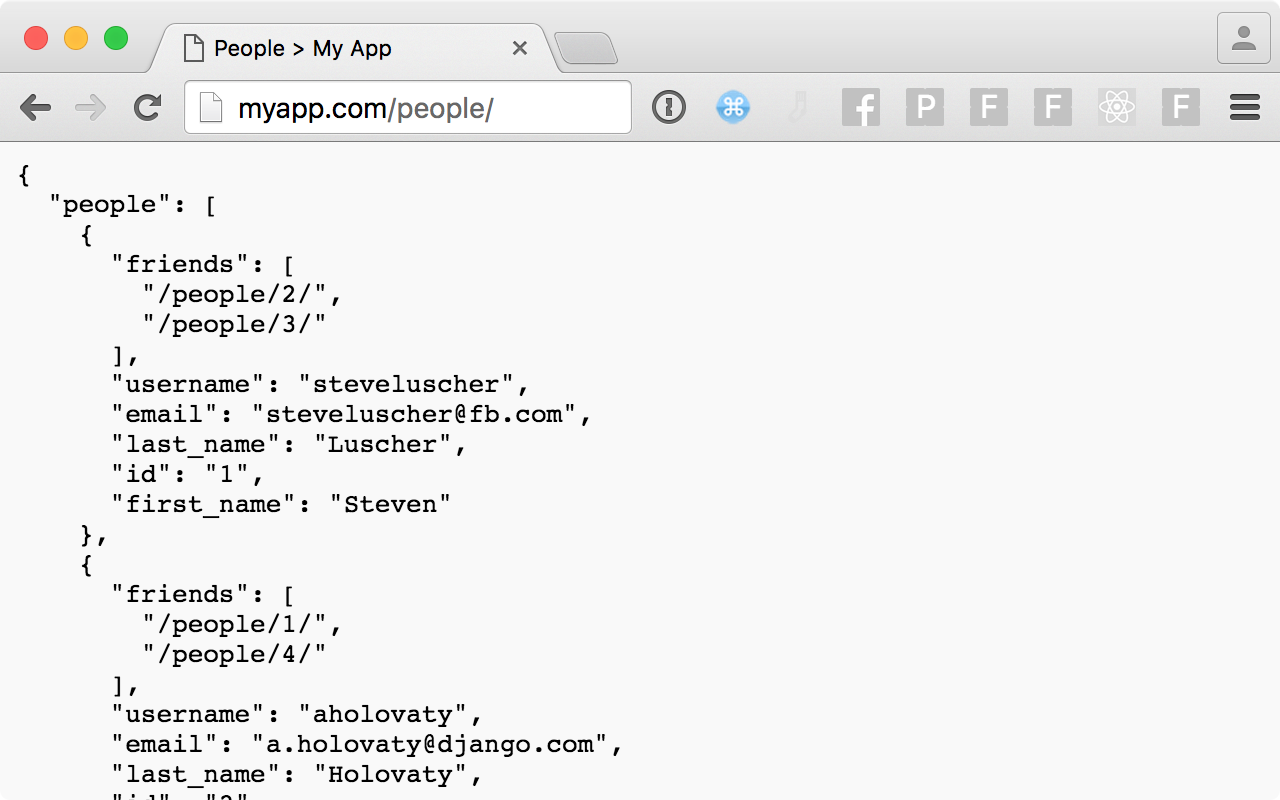

Imagine a REST API that exposes a /people/ endpoint through which you can browse Person models and their associated friends.

We will build a GraphQL schema that models people and their attributes (like first_name and email) as well as their association to other people through friendships.

First we'll need a set of schema building tools.

```shell
npm install --save graphql
```

Ultimately we will want to export a GraphQLSchema that we can use to resolve queries.

```javascript
import { GraphQLSchema } from 'graphql';

export default new GraphQLSchema({
  query: QueryType,
});
```

At the root of all GraphQL schemas is a type called query whose definition we provide, and have specified here as QueryType. Let's build QueryType now – a type on which we will define all the possible things one might want to fetch.

To replicate all of the functionality of our REST API, let's expose two fields on QueryType:

- allPeople field – analogous to /people/
- person(id: String) field – analogous to /people/{ID}/

Each field will consist of a return type, optional argument definitions, and a JavaScript method that resolves the data being queried for.

```javascript
import {
  GraphQLList,
  GraphQLObjectType,
  GraphQLString,
} from 'graphql';

const QueryType = new GraphQLObjectType({
  name: 'Query',
  description: 'The root of all... queries',
  fields: () => ({
    allPeople: {
      type: new GraphQLList(PersonType),
      resolve: root => {
        // Fetch the index of people from the REST API
      },
    },
    person: {
      type: PersonType,
      args: {
        id: { type: GraphQLString },
      },
      resolve: (root, args) => {
        // Fetch the person with ID `args.id`
      },
    },
  }),
});
```

Let's leave the resolvers as a sketch for now, and move on to defining PersonType.

```javascript
import {
  GraphQLList,
  GraphQLObjectType,
  GraphQLString,
} from 'graphql';

const PersonType = new GraphQLObjectType({
  name: 'Person',
  description: 'Somebody that you used to know',
  fields: () => ({
    firstName: {
      type: GraphQLString,
      resolve: person => person.first_name,
    },
    lastName: {
      type: GraphQLString,
      resolve: person => person.last_name,
    },
    email: {type: GraphQLString},
    id: {type: GraphQLString},
    username: {type: GraphQLString},
    friends: {
      type: new GraphQLList(PersonType),
      resolve: person => {
        // Fetch the friends with the URLs `person.friends`
      },
    },
  }),
});
```

Note that in the definition of PersonType we have not supplied a resolver for email, id, or username. The default resolver simply accesses the property of the person object that has the same name as the field. This works everywhere except where the property names do not match the field name (e.g. the field firstName does not match the first_name property of the response object from the REST API) or where accessing the property would not yield the object that we want (e.g. we want a list of person objects for the friends field, not a list of URLs).
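To make the default-resolver rule concrete, here is a minimal, dependency-free sketch. `defaultResolve`, `resolveField`, `personFields`, and the sample `restPerson` object are hypothetical names for illustration; the real graphql-js default resolver also invokes the property when it is a function, which this sketch leaves out.

```javascript
// Hypothetical, simplified default resolver: read the property whose name
// matches the field name (graphql-js also calls it if it is a function).
function defaultResolve(source, fieldName) {
  return source == null ? undefined : source[fieldName];
}

// Field definitions modeled on PersonType. Only fields whose names differ
// from the REST property names get an explicit resolver.
const personFields = {
  firstName: { resolve: person => person.first_name },
  lastName: { resolve: person => person.last_name },
  email: {},
  id: {},
  username: {},
};

// Use the field's own resolver when present, otherwise fall back.
function resolveField(fields, source, fieldName) {
  const field = fields[fieldName];
  return field.resolve ? field.resolve(source) : defaultResolve(source, fieldName);
}

// A sample REST response object with snake_case property names.
const restPerson = {
  id: '1',
  first_name: 'Ada',
  last_name: 'Lovelace',
  email: 'ada@example.com',
  username: 'ada',
};

console.log(resolveField(personFields, restPerson, 'firstName')); // → Ada
console.log(resolveField(personFields, restPerson, 'email'));     // → ada@example.com
console.log(defaultResolve(restPerson, 'firstName'));             // → undefined
```

The last line shows why firstName needs its own resolver: a plain property lookup finds no firstName on the REST object.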

Now, let's write resolvers that fetch people from the REST API. Because we need to load from the network, we won't be able to return a value right away. Luckily for us, resolve() can return either a value or a Promise for a value. We're going to take advantage of this to fire off an HTTP request to the REST API that eventually resolves to a JavaScript object that conforms to PersonType.
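Here is a small, dependency-free sketch of one resolver that returns a plain value and one that returns a Promise. The in-memory `fakeRestApi` and the `setTimeout` delay are assumptions standing in for real HTTP calls, and `runResolver` is a hypothetical helper; graphql-js performs this value-or-Promise handling for you internally.

```javascript
// Hypothetical in-memory stand-in for the REST API, so the sketch runs
// without a network. Real code would call fetch() instead.
const fakeRestApi = {
  '/people/1/': { person: { id: '1', first_name: 'Ada', friends: ['/people/2/'] } },
  '/people/2/': { person: { id: '2', first_name: 'Grace', friends: ['/people/1/'] } },
};

function fetchPersonByURL(relativeURL) {
  // Simulate network latency: the result only becomes available later.
  return new Promise(resolve => {
    setTimeout(() => resolve(fakeRestApi[relativeURL].person), 10);
  });
}

// One resolver returns a plain value, the other returns a Promise.
const firstNameResolver = person => person.first_name;
const friendsResolver = person => Promise.all(person.friends.map(fetchPersonByURL));

// Wrapping the result in Promise.resolve() lets a caller treat both alike.
function runResolver(resolver, source) {
  return Promise.resolve(resolver(source));
}

fetchPersonByURL('/people/1/')
  .then(person => Promise.all([
    runResolver(firstNameResolver, person),
    runResolver(friendsResolver, person),
  ]))
  .then(([firstName, friends]) => {
    console.log(firstName, friends.map(f => f.first_name)); // → Ada [ 'Grace' ]
  });
```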

And here we have it – a complete first-pass at the schema:

```javascript
import {
  GraphQLList,
  GraphQLObjectType,
  GraphQLSchema,
  GraphQLString,
} from 'graphql';

// No trailing slash: the relative URLs below already start with one.
const BASE_URL = 'https://myapp.com';

function fetchResponseByURL(relativeURL) {
  return fetch(`${BASE_URL}${relativeURL}`).then(res => res.json());
}

function fetchPeople() {
  return fetchResponseByURL('/people/').then(json => json.people);
}

function fetchPersonByURL(relativeURL) {
  return fetchResponseByURL(relativeURL).then(json => json.person);
}

const PersonType = new GraphQLObjectType({
  /* ... */
  fields: () => ({
    /* ... */
    friends: {
      type: new GraphQLList(PersonType),
      resolve: person => person.friends.map(fetchPersonByURL),
    },
  }),
});

const QueryType = new GraphQLObjectType({
  /* ... */
  fields: () => ({
    allPeople: {
      type: new GraphQLList(PersonType),
      resolve: fetchPeople,
    },
    person: {
      type: PersonType,
      args: {
        id: { type: GraphQLString },
      },
      resolve: (root, args) => fetchPersonByURL(`/people/${args.id}/`),
    },
  }),
});

export default new GraphQLSchema({
  query: QueryType,
});
```

Normally, Relay will send its GraphQL queries to a server over HTTP. We can inject @taion's custom relay-local-schema network layer to resolve queries using the schema we just built. Put this code wherever it's guaranteed to be executed before you mount your Relay app.

```shell
npm install --save relay-local-schema
```

```javascript
import Relay from 'react-relay';
import RelayLocalSchema from 'relay-local-schema';

import schema from './schema';

Relay.injectNetworkLayer(
  new RelayLocalSchema.NetworkLayer({ schema })
);
```

And that's that. Relay will send all of its queries to your custom client-resident schema, which will in turn resolve them by making calls to your existing REST API.

The client-side REST API wrapper demonstrated above should help you get up and running quickly so that you can try out a Relay version of your app (or part of your app).

However, as we mentioned before, this architecture features some inherent performance flaws, because GraphQL is still calling your underlying REST API, which can be very network-intensive. A good next step is to move the schema from the client side to the server side to minimize latency on the network and to give you more power to cache responses.

Take the next 10 minutes to watch me build a server side version of the GraphQL wrapper above using Node and Express.

The schema we developed above will work for Relay up until a certain point – the point at which you ask Relay to refetch data for records you've already downloaded. Relay's refetching subsystem relies on your GraphQL schema exposing a special field that can fetch any entity in your data universe by GUID. We call this the node interface.

To expose a node interface requires that you do two things: offer a node(id: String!) field at the root of the query, and switch all of your ids over to GUIDs (globally-unique ids).

The graphql-relay package contains some helper functions to make this easy to do.

```shell
npm install --save graphql-relay
```

First, let's change the id field of PersonType into a GUID. To do this, we'll use the globalIdField helper from graphql-relay.

```javascript
import {
  globalIdField,
} from 'graphql-relay';

const PersonType = new GraphQLObjectType({
  name: 'Person',
  description: 'Somebody that you used to know',
  fields: () => ({
    id: globalIdField('Person'),
    /* ... */
  }),
});
```

Behind the scenes, globalIdField returns a field definition that resolves id to a non-null GraphQLID by base64-encoding the typename 'Person' and the id returned by the REST API, joined by a colon. Because this encoding is reversible, we can later use fromGlobalId to convert the result of this field back into 'Person' and the REST API's id.
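To see why fromGlobalId can undo globalIdField, here is a sketch of the same scheme graphql-relay uses: join the typename and id with a colon, then base64-encode the result. This is our own reimplementation on top of Node's `Buffer`, not the library's source, so treat the function bodies as illustrative.

```javascript
// Encode a typename and a type-local id into one opaque global id.
function toGlobalId(type, id) {
  return Buffer.from(`${type}:${id}`, 'utf8').toString('base64');
}

// Decode, then split at the FIRST colon so that local ids which themselves
// contain colons survive the round trip.
function fromGlobalId(globalId) {
  const decoded = Buffer.from(globalId, 'base64').toString('utf8');
  const delimiterPos = decoded.indexOf(':');
  return {
    type: decoded.substring(0, delimiterPos),
    id: decoded.substring(delimiterPos + 1),
  };
}

const guid = toGlobalId('Person', '1');
console.log(guid);               // → UGVyc29uOjE=
console.log(fromGlobalId(guid)); // → { type: 'Person', id: '1' }
```

Base64 here only makes the id opaque to clients; it is not a hash, and it provides no security.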

Another set of helpers from graphql-relay will give us a hand developing the node field. Your job is to supply the helper with two functions: one that resolves an object given a GUID, and one that resolves a typename given an object.

```javascript
import {
  fromGlobalId,
  nodeDefinitions,
} from 'graphql-relay';

const { nodeInterface, nodeField } = nodeDefinitions(
  globalId => {
    const { type, id } = fromGlobalId(globalId);
    if (type === 'Person') {
      return fetchPersonByURL(`/people/${id}/`);
    }
  },
  object => {
    if (object.hasOwnProperty('username')) {
      return 'Person';
    }
  },
);
```

The object-to-typename resolver above is no marvel of engineering, but you get the idea.

Next, we simply need to add the nodeInterface and the nodeField to our schema. A complete example follows:

```javascript
import {
  GraphQLList,
  GraphQLObjectType,
  GraphQLSchema,
  GraphQLString,
} from 'graphql';
import {
  fromGlobalId,
  globalIdField,
  nodeDefinitions,
} from 'graphql-relay';

const BASE_URL = 'https://myapp.com';

function fetchResponseByURL(relativeURL) {
  return fetch(`${BASE_URL}${relativeURL}`).then(res => res.json());
}

function fetchPeople() {
  return fetchResponseByURL('/people/').then(json => json.people);
}

function fetchPersonByURL(relativeURL) {
  return fetchResponseByURL(relativeURL).then(json => json.person);
}

const { nodeInterface, nodeField } = nodeDefinitions(
  globalId => {
    const { type, id } = fromGlobalId(globalId);
    if (type === 'Person') {
      return fetchPersonByURL(`/people/${id}/`);
    }
  },
  object => {
    if (object.hasOwnProperty('username')) {
      return 'Person';
    }
  },
);

const PersonType = new GraphQLObjectType({
  name: 'Person',
  description: 'Somebody that you used to know',
  fields: () => ({
    firstName: {
      type: GraphQLString,
      resolve: person => person.first_name,
    },
    lastName: {
      type: GraphQLString,
      resolve: person => person.last_name,
    },
    email: {type: GraphQLString},
    id: globalIdField('Person'),
    username: {type: GraphQLString},
    friends: {
      type: new GraphQLList(PersonType),
      resolve: person => person.friends.map(fetchPersonByURL),
    },
  }),
  interfaces: [ nodeInterface ],
});

const QueryType = new GraphQLObjectType({
  name: 'Query',
  description: 'The root of all... queries',
  fields: () => ({
    allPeople: {
      type: new GraphQLList(PersonType),
      resolve: fetchPeople,
    },
    node: nodeField,
    person: {
      type: PersonType,
      args: {
        id: { type: GraphQLString },
      },
      resolve: (root, args) => fetchPersonByURL(`/people/${args.id}/`),
    },
  }),
});

export default new GraphQLSchema({
  query: QueryType,
});
```

Consider the following friends-of-friends-of-friends query:

```graphql
query {
  person(id: "1") {
    firstName
    friends {
      firstName
      friends {
        firstName
        friends {
          firstName
        }
      }
    }
  }
}
```

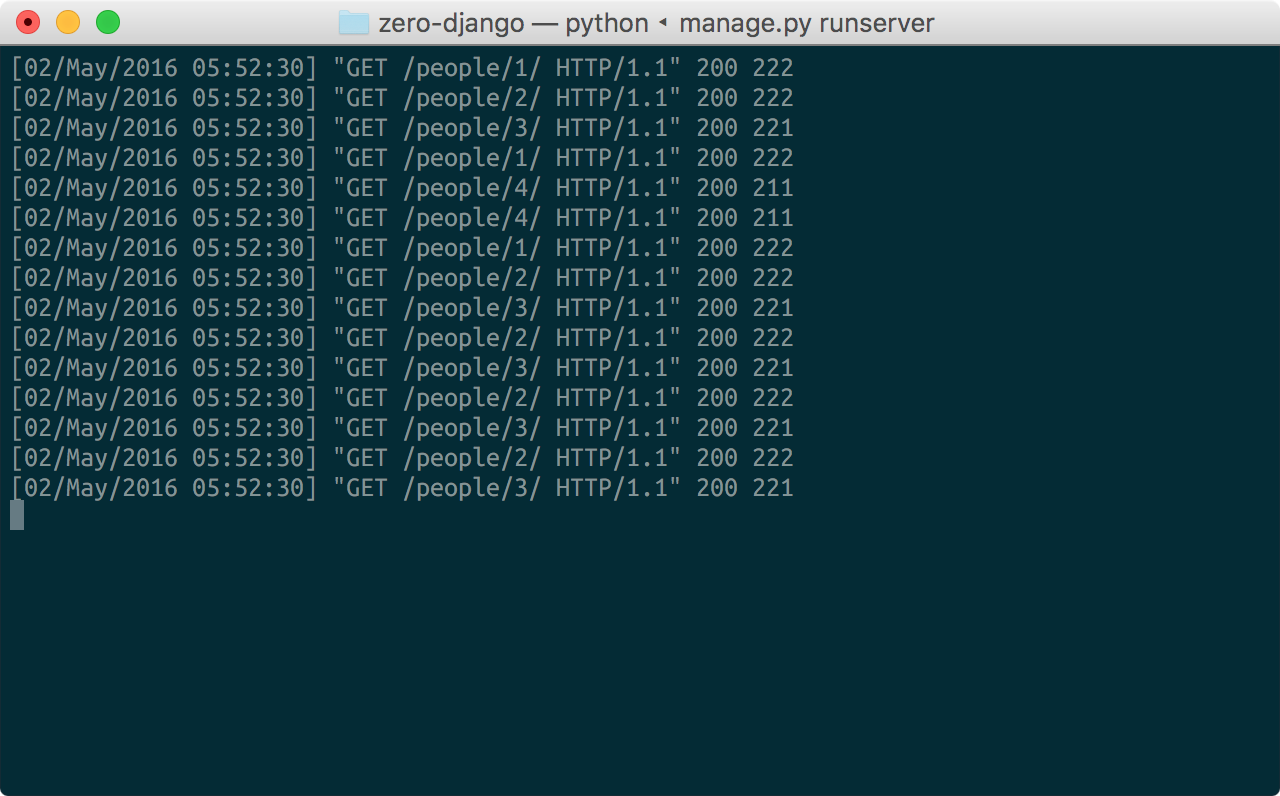

The schema we created above will generate multiple round trips to the REST API for the same data.

This is obviously something we would like to avoid! At the very least, we need a way to cache the result of these requests.
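To make the duplication concrete, here is a dependency-free simulation of the friends-of-friends-of-friends query against a hypothetical three-person graph in which everyone is friends with everyone else. `friendURLs`, `fetchPerson`, and `resolveFriends` are illustrative stand-ins, not part of the schema; the point is only to count requests when nothing is cached.

```javascript
// Hypothetical friendship graph standing in for the REST API:
// each key is a URL, each value lists the URLs of that person's friends.
const friendURLs = {
  '/people/1/': ['/people/2/', '/people/3/'],
  '/people/2/': ['/people/1/', '/people/3/'],
  '/people/3/': ['/people/1/', '/people/2/'],
};

// Record how many times each URL is requested.
const requestCounts = {};
function fetchPerson(url) {
  requestCounts[url] = (requestCounts[url] || 0) + 1;
  return { url, friends: friendURLs[url] };
}

// Resolve `friends { friends { friends { ... } } }` naively: every nested
// friends field fetches each friend again, with no caching.
function resolveFriends(person, depth) {
  if (depth === 0) return;
  for (const url of person.friends) {
    resolveFriends(fetchPerson(url), depth - 1);
  }
}

// person(id: "1") followed by three levels of friends.
resolveFriends(fetchPerson('/people/1/'), 3);

const total = Object.values(requestCounts).reduce((a, b) => a + b, 0);
console.log(total);                             // → 15
console.log(Object.keys(requestCounts).length); // → 3
```

Fifteen requests for three distinct people: each nested level doubles the work, which is exactly the waste a cache should remove.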

We created a library called DataLoader to help tame these sorts of queries.

```shell
npm install --save dataloader
```

As a special note, make sure that your runtime offers native or polyfilled versions of Promise and Map. Read more at the DataLoader site.

To create a DataLoader you supply a method that can resolve a list of objects given a list of keys. In our example, the keys are URLs at which we access our REST API.

```javascript
const personLoader = new DataLoader(
  urls => Promise.all(urls.map(fetchPersonByURL))
);
```

If this data loader sees a key more than once in its lifetime, it will return a memoized (cached) version of the response.
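DataLoader itself handles many more cases (options such as custom cache keys and cache clearing), but its two core ideas fit in a short sketch. `TinyLoader` below is hypothetical and is neither DataLoader's API nor its source. It batches every key requested in the same tick into one call to the batch function, and it memoizes each key's promise so that a repeated key is never fetched twice.

```javascript
class TinyLoader {
  constructor(batchFn) {
    this.batchFn = batchFn; // (keys) => Promise<values>, values in key order
    this.cache = new Map(); // key -> Promise<value>
    this.queue = [];        // pending { key, resolve, reject }
  }

  load(key) {
    // Memoization: a key seen before returns the very same promise.
    if (this.cache.has(key)) return this.cache.get(key);
    const promise = new Promise((resolve, reject) => {
      this.queue.push({ key, resolve, reject });
      // Batching: schedule one flush after the current synchronous code.
      if (this.queue.length === 1) {
        Promise.resolve().then(() => this.flush());
      }
    });
    this.cache.set(key, promise);
    return promise;
  }

  loadMany(keys) {
    return Promise.all(keys.map(key => this.load(key)));
  }

  flush() {
    const batch = this.queue;
    this.queue = [];
    this.batchFn(batch.map(item => item.key)).then(
      values => batch.forEach((item, i) => item.resolve(values[i])),
      error => batch.forEach(item => item.reject(error)),
    );
  }
}

// Usage: record each batch so we can see how many calls were made.
const batches = [];
const personLoader = new TinyLoader(urls => {
  batches.push(urls);
  return Promise.resolve(urls.map(url => ({ url })));
});

personLoader.loadMany(['/people/1/', '/people/2/', '/people/1/']).then(people => {
  console.log(people.map(p => p.url)); // → [ '/people/1/', '/people/2/', '/people/1/' ]
  console.log(batches);                // → [ [ '/people/1/', '/people/2/' ] ]
});
```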

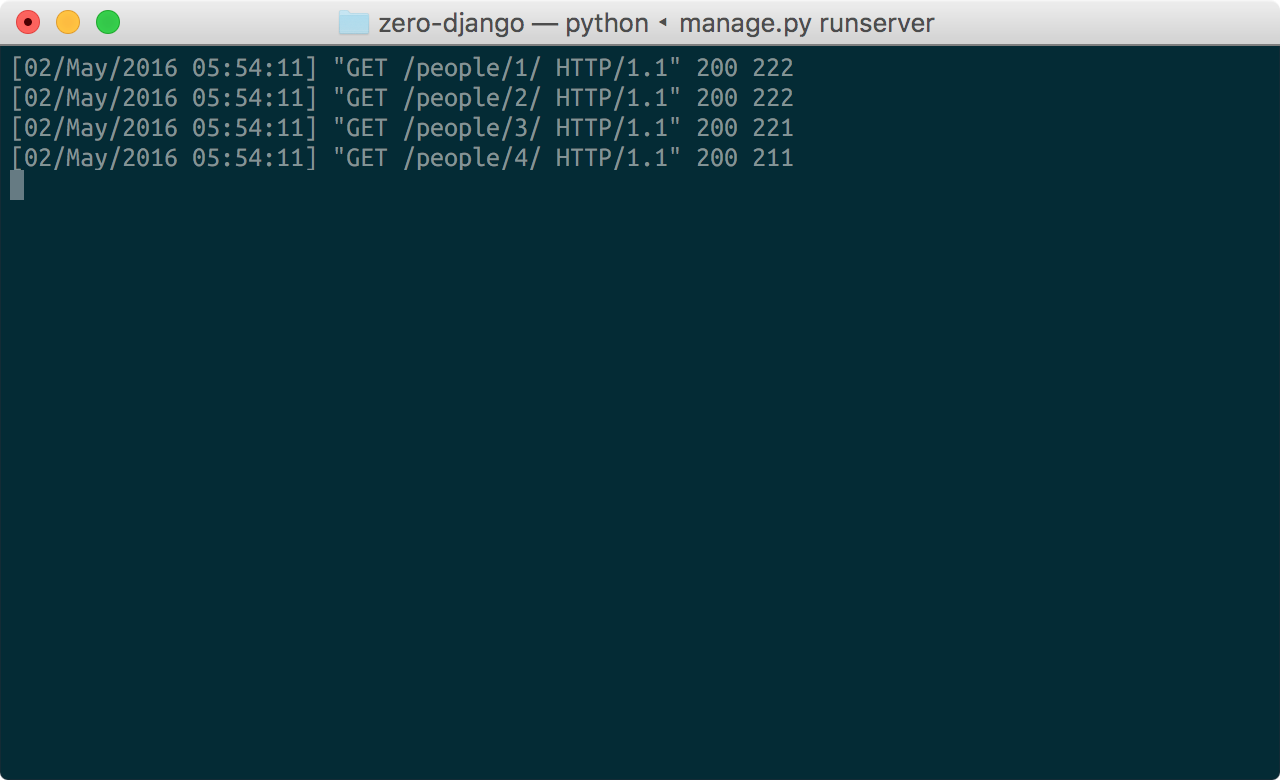

We can make use of the load() and loadMany() methods on personLoader to load URLs without fear of hitting the REST API more than once per URL. A complete example follows:

```javascript
import DataLoader from 'dataloader';
import {
  GraphQLList,
  GraphQLObjectType,
  GraphQLSchema,
  GraphQLString,
} from 'graphql';
import {
  fromGlobalId,
  globalIdField,
  nodeDefinitions,
} from 'graphql-relay';

const BASE_URL = 'https://myapp.com';

function fetchResponseByURL(relativeURL) {
  return fetch(`${BASE_URL}${relativeURL}`).then(res => res.json());
}

function fetchPeople() {
  return fetchResponseByURL('/people/').then(json => json.people);
}

function fetchPersonByURL(relativeURL) {
  return fetchResponseByURL(relativeURL).then(json => json.person);
}

// Batches and memoizes person fetches, keyed by relative URL.
const personLoader = new DataLoader(
  urls => Promise.all(urls.map(fetchPersonByURL))
);

const { nodeInterface, nodeField } = nodeDefinitions(
  globalId => {
    const {type, id} = fromGlobalId(globalId);
    if (type === 'Person') {
      return personLoader.load(`/people/${id}/`);
    }
  },
  object => {
    if (object.hasOwnProperty('username')) {
      return 'Person';
    }
  },
);

const PersonType = new GraphQLObjectType({
  name: 'Person',
  description: 'Somebody that you used to know',
  fields: () => ({
    firstName: {
      type: GraphQLString,
      resolve: person => person.first_name,
    },
    lastName: {
      type: GraphQLString,
      resolve: person => person.last_name,
    },
    email: {type: GraphQLString},
    id: globalIdField('Person'),
    username: {type: GraphQLString},
    friends: {
      type: new GraphQLList(PersonType),
      resolve: person => personLoader.loadMany(person.friends),
    },
  }),
  interfaces: [nodeInterface],
});

const QueryType = new GraphQLObjectType({
  name: 'Query',
  description: 'The root of all... queries',
  fields: () => ({
    allPeople: {
      type: new GraphQLList(PersonType),
      resolve: fetchPeople,
    },
    node: nodeField,
    person: {
      type: PersonType,
      args: {
        id: { type: GraphQLString },
      },
      resolve: (root, args) => personLoader.load(`/people/${args.id}/`),
    },
  }),
});

export default new GraphQLSchema({
  query: QueryType,
});
```

Now, our pathological query produces the following nicely de-duped set of requests to the REST API:

Consider that your REST API might already offer configuration options that let you eagerly load associations. For example, to load a person and all of their direct friends, you might hit the URL /people/1/?include_friends. To take advantage of this in your GraphQL schema, you will need the ability to develop a resolution plan based on the structure of the query itself (e.g. whether the friends field is part of the query or not).
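Here is a minimal sketch of that idea, assuming the REST API accepts the `include_friends` flag from the example URL above. The selection is modeled as a plain nested object; in a real schema you would derive it from the resolver's fourth argument, `info`. `planPersonRequest` is a hypothetical helper name.

```javascript
// Choose which REST URL to call based on the shape of the query.
// `selection` maps field names to `true` (leaf) or a nested selection.
function planPersonRequest(id, selection) {
  const base = `/people/${id}/`;
  // If the query asks for friends, ask the REST API to embed them eagerly
  // (assumes the API supports an `include_friends` flag).
  return 'friends' in selection ? `${base}?include_friends` : base;
}

console.log(planPersonRequest('1', { firstName: true }));
// → /people/1/
console.log(planPersonRequest('1', { firstName: true, friends: { firstName: true } }));
// → /people/1/?include_friends
```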

For those interested in the current thinking around advanced resolution strategies, keep an eye on pull request #304.

I hope that this demonstration has torn down some of the barriers between you and a functional GraphQL endpoint, and has inspired you to experiment with GraphQL and Relay on an existing project.

4/19/2016 by Jonas Helfer

This guest article was contributed by Jonas Helfer, engineer at Meteor working on Apollo.

Do you think mocking your backend is always a tedious task? If you do, reading this post might change your mind…

Mocking is the practice of creating a fake version of a component, so that you can develop and test other parts of your application independently. Mocking your backend is a great way to quickly build a prototype of your frontend, and it lets you test your frontend without starting up any servers. API mocking is so useful that a quick Google search will turn up dozens of expensive products and services that promise to help you.

Sadly, I think none of the solutions out there make it as easy as it should be. As it turns out, that’s because they’ve been trying to do it with the wrong technology!

Editor’s note: The concepts in this post are accurate, but some of the code samples don’t demonstrate current usage patterns. After reading, consult the graphql-tools docs to see how to use mocking today.

Mocking the data a backend would return is very useful for two main reasons:

If mocking your backend API has such clear benefits, why doesn’t everyone do it? I think it's because mocking often seems like too much trouble to be worth it.

Let’s say your backend is some REST API that is called over HTTP from the browser. You have someone working on the backend, and someone else working on the frontend. The backend code actually determines the shape of the data returned for each REST endpoint, but mocking has to be done in the frontend code. That means the mocking code will break every time the backend changes, unless both are changed at the same time. What’s worse, if you’re doing your frontend testing against a mock backend that is not up to date with your backend, your tests may pass, but your actual frontend won’t work.

Rather than having to keep more dependencies up to date, the easy option is to just not mock the REST API, or have the backend be in charge of mocking itself, just so it’s all in one place. That may be easier, but it will also slow you down.

The other reason I often hear for why people don’t mock the backend in their project is because it takes time to set up: first you have to include extra logic in your data fetching layer that lets you turn mocking on and off, and second you have to actually describe what that mock data should look like. For any non-trivial API, that requires a lot of tedious work.

Both of these reasons why mocking backends is hard actually stem from the same underlying problem: there is no standard REST API description that is machine-consumable, contains all the information necessary for mocking, and can be used by both the backend and the frontend. There are some API description standards, like Swagger, but they don’t contain all of the information you need, and can be cumbersome to write and maintain. Unless you want to pay for a service or a product (and maybe even then), mocking is a lot of work.

Actually, I should say mocking used to be a lot of work, because a new technology is changing the way we think of APIs: GraphQL.

GraphQL makes mocking easy, because every GraphQL backend comes with a static type system. The types can be shared between your backend and your frontend, and they contain all of the information necessary to make mocking incredibly fast and convenient. With GraphQL, there is no excuse to not mock your backend for development or testing.

Here’s how easy it is to create a mocked backend that will accept any valid GraphQL query with the GraphQL mocking tool we are building as part of our new GraphQL server toolkit:

Every GraphQL server needs a schema, so it’s not extra code you need to write just for mocking. And the query is the one your component already uses for fetching data, so that’s also not code you write just for mocking. Not counting the import statement, it only took us one line of code to mock the entire backend!

Put that in contrast to most REST APIs out there, where mocking means parsing a URL and returning data in a custom shape for each endpoint. It takes dozens of lines to mock a single endpoint that returns some realistic-looking data. With GraphQL, the shape is encoded in the query, and together with the schema we have enough information to mock the server with a single line of code.

Did I mention that this one line is all you need to return mock data for any valid GraphQL query you could send? Not just some valid query, any valid query! Pretty cool, right?

In the example above, the mock server will return completely random IDs and strings every time you query it. When you’ve just started building your app and only want to see what your UI code looks like in different states, that’s probably good enough, but as you start to fine-tune your layout, or want to use the mock server to test your component’s logic, you’ll probably need more realistic data.

Luckily, this takes only a little more effort: customization of mock data is really where the Apollo mocking tool shines, because it lets you customize virtually everything about the mock data that it returns.

It lets you do all of the following and more:

For each type and each field you can provide a function that will be called on to generate mock data. Mock functions on fields have precedence over mock functions on types, but they work together really nicely: The field mock functions only need to describe the properties of the objects that matter to them, type mock functions will fill in the rest.
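The precedence rule is easier to see in code. This is a hypothetical, dependency-free sketch, not the graphql-tools API. A type mock supplies defaults for every `User`, a field mock for a hypothetical `admin` field overrides only the property it cares about, and object spread merges the two so that the field mock wins.

```javascript
// Merge a type-level mock with a field-level mock for one object.
// Later spreads win, so field mocks take precedence over type mocks,
// and the type mock fills in every property the field mock leaves out.
function mockObject(typeName, fieldName, typeMocks, fieldMocks) {
  const fromType = typeMocks[typeName] ? typeMocks[typeName]() : {};
  const fromField = fieldMocks[fieldName] ? fieldMocks[fieldName]() : {};
  return { ...fromType, ...fromField };
}

const typeMocks = {
  // Every User gets plausible defaults.
  User: () => ({ id: 'user-1', name: 'Jane Doe', email: 'jane@example.com' }),
};

const fieldMocks = {
  // The hypothetical `admin` field only cares about the name.
  admin: () => ({ name: 'Site Admin' }),
};

console.log(mockObject('User', 'admin', typeMocks, fieldMocks));
// → { id: 'user-1', name: 'Site Admin', email: 'jane@example.com' }
```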

The mock functions are actually just GraphQL resolve functions in disguise. What that means is that your mocking can do anything that you could do inside a GraphQL resolve function. If you wanted, you could write your entire backend with it. I’m not saying you should, but you could.

I think the real power of this tool is that while it allows almost arbitrarily complex customization, you can get started really quickly and increase the sophistication of your mocks in tiny steps whenever you need it. Each step is so simple that it will feel like a breeze.

But enough talking, here’s a complete example:

To see the example in action and see what output it generates, head over to the live demo and try running some queries!

If you want to fiddle around with the example, just click the "Download" button in the Launchpad UI. If you’re curious about how it works or want to see what other tools we’re building for GraphQL, then head over to apollostack/graphql-tools.

Pretty cool, right? All of that becomes possible by using a type system. And that’s only just the beginning: we’re working on bridging the gap between mocking and the real thing so that your mock server can gradually turn into your real server as you add more functionality to it.

This post was originally published on the Apollo Blog. We publish one or two posts every week, about the stuff we’re working on and thinking about.

10/16/2015 by Dan Schafer and Laney Kuenzel

When we announced and open-sourced GraphQL and Relay this year, we described how they can be used to perform reads with queries, and to perform writes with mutations. However, oftentimes clients want to get pushed updates from the server when data they care about changes. To support that, we’ve introduced a third operation into the GraphQL specification: subscription.

The approach that we’ve taken to subscriptions parallels that of mutations; just as the list of mutations that the server supports describes all of the actions that a client can take, the list of subscriptions that the server supports describes all of the events that it can subscribe to. Just as a client can tell the server what data to refetch after it performs a mutation with a GraphQL selection, the client can tell the server what data it wants to be pushed with the subscription with a GraphQL selection.

For example, in the Facebook schema, we have a mutation field named storyLike that clients can use to like a post. The client might want to refetch the like count, as well as the like sentence (“Dan and 3 others like this”; we do this translation on the server because of the complexity of that translation in various languages). To do so, they would issue the following mutation:

```graphql
mutation StoryLikeMutation($input: StoryLikeInput) {
  storyLike(input: $input) {
    story {
      likers { count }
      likeSentence { text }
    }
  }
}
```

But when you’re looking at a post, you also want to get pushed an update whenever someone else likes the post! That’s where subscriptions come in; the Facebook schema has a subscription field named storyLikeSubscribe that allows the client to get pushed data anytime someone likes or unlikes that story! The client would create a subscription like this:

```graphql
subscription StoryLikeSubscription($input: StoryLikeSubscribeInput) {
  storyLikeSubscribe(input: $input) {
    story {
      likers { count }
      likeSentence { text }
    }
  }
}
```

The client would then send this subscription to the server, along with the value for the $input variable, which would contain information like the story ID to which we are subscribing:

```graphql
input StoryLikeSubscribeInput {
  storyId: String
  clientSubscriptionId: String
}
```

At Facebook, we send this query to the server at build time to generate a unique ID for it, then subscribe to a special MQTT topic with the subscription ID in it, but many different subscription mechanisms could be used here.

On the server, we then trigger this subscription every time someone likes a post. If all of our clients were using GraphQL, we could put this hook in the GraphQL mutation; since we have non-GraphQL clients as well, we put the hook in a layer below the GraphQL mutation to ensure it always fires.
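Here is a small in-memory sketch of the event-based model, assuming a single process and no network. `subscribe`, `likeStory`, and the `select` callback (standing in for the subscription's GraphQL selection) are hypothetical names; Facebook's implementation described above uses MQTT topics instead. The key point is that the write path publishes the event explicitly, so nothing has to infer what changed.

```javascript
// storyId -> Set of { clientSubscriptionId, select, push }
const subscribers = new Map();

// Register interest in likes on one story. `select` builds the payload the
// client asked for; `push` delivers it. Returns an unsubscribe function.
function subscribe(storyId, clientSubscriptionId, select, push) {
  if (!subscribers.has(storyId)) subscribers.set(storyId, new Set());
  const entry = { clientSubscriptionId, select, push };
  subscribers.get(storyId).add(entry);
  return () => subscribers.get(storyId).delete(entry);
}

const stories = { s1: { likers: new Set() } };

// The write path itself publishes the event, so every caller of likeStory,
// GraphQL or not, triggers the subscription.
function likeStory(storyId, userId) {
  stories[storyId].likers.add(userId);
  for (const { clientSubscriptionId, select, push } of subscribers.get(storyId) || []) {
    push(clientSubscriptionId, select(stories[storyId]));
  }
}

const received = [];
subscribe(
  's1',
  'client-42',
  story => ({ likers: { count: story.likers.size } }),
  (id, payload) => received.push({ id, payload }),
);

likeStory('s1', 'dan');
likeStory('s1', 'laney');
console.log(JSON.stringify(received));
// → [{"id":"client-42","payload":{"likers":{"count":1}}},{"id":"client-42","payload":{"likers":{"count":2}}}]
```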

Notably, this approach requires the client to subscribe to events that it cares about. Another approach is to have the client subscribe to a query, and ask for updates every time the result of that query changes. Why didn’t we take that approach?

Let’s look back at the data we wanted to refetch for the story:

```graphql
fragment StoryLikeData on Story {
  likers { count }
  likeSentence { text }
}
```

What events could trigger a change to the data fetched in that fragment?

And that’s just the tip of the iceberg in terms of events; each of those events also becomes tricky when there are thousands of people subscribed, and millions of people who liked the post. Implementing live queries for this set of data proved to be immensely complicated.

When building event-based subscriptions, the problem of determining what should trigger an event is easy, since the event defines that explicitly. It also proved fairly straightforward to implement atop existing message queue systems. For live queries, though, this appeared much harder. The value of our fields is determined by the result of their resolve function, and figuring out all of the things that could alter the result of that function was difficult. We could in theory have polled on the server to implement this, but that had efficiency and timeliness issues. Based on this, we decided to invest in the event-based subscription approach.

We’re actively building out the event-based subscription approach described above. We’ve built out live liking and commenting features on our iOS and Android apps using that approach, and are continuing to flesh out its functionality and API. While its current implementation at Facebook is coupled to Facebook’s infrastructure, we’re certainly looking forward to open sourcing our progress here as soon as we can.

Because our backend and schema don’t offer easy support for live queries, we don’t have any plans to develop them at Facebook. At the same time, it’s clear that there are backends and schemas for which live queries are feasible, and that they offer a lot of value in those situations. The discussion in the community on this topic has been fantastic, and we’re excited to see what kind of live query proposals emerge from it!

Subscriptions create a ton of possibilities for creating truly dynamic applications. We’re excited to continue developing GraphQL and Relay with the help of the community to enable these possibilities.

9/14/2015 by Lee Byron

When we built Facebook's mobile applications, we needed a data-fetching API powerful enough to describe all of Facebook, yet simple and easy to learn so product developers can focus on building things quickly. We developed GraphQL three years ago to fill this need. Today it powers hundreds of billions of API calls a day. This year we've begun the process of open-sourcing GraphQL by drafting a specification, releasing a reference implementation, and forming a community around it here at graphql.org.

Back in 2012, we began an effort to rebuild Facebook's native mobile applications.

At the time, our iOS and Android apps were thin wrappers around views of our mobile website. While this brought us close to a platonic ideal of the "write once, run anywhere" mobile application, in practice it pushed our mobile-webview apps beyond their limits. As Facebook's mobile apps became more complex, they suffered poor performance and frequently crashed.

As we transitioned to natively implemented models and views, we found ourselves for the first time needing an API data version of News Feed — which up until that point had only been delivered as HTML. We evaluated our options for delivering News Feed data to our mobile apps, including RESTful server resources and FQL tables (Facebook's SQL-like API). We were frustrated with the differences between the data we wanted to use in our apps and the server queries they required. We don't think of data in terms of resource URLs, secondary keys, or join tables; we think about it in terms of a graph of objects and the models we ultimately use in our apps like NSObjects or JSON.

There was also a considerable amount of code to write on both the server to prepare the data and on the client to parse it. This frustration inspired a few of us to start the project that ultimately became GraphQL. GraphQL was our opportunity to rethink mobile app data-fetching from the perspective of product designers and developers. It moved the focus of development to the client apps, where designers and developers spend their time and attention.

A GraphQL query is a string that is sent to a server to be interpreted and fulfilled, which then returns JSON back to the client.

Defines a data shape: The first thing you'll notice is that GraphQL queries mirror their response. This makes it easy to predict the shape of the data returned from a query, as well as to write a query if you know the data your app needs. More importantly, this makes GraphQL really easy to learn and use. GraphQL is unapologetically driven by the data requirements of products and of the designers and developers who build them.

Hierarchical: Another important aspect of GraphQL is its hierarchical nature. GraphQL naturally follows relationships between objects, where a RESTful service may require multiple round-trips (resource-intensive on mobile networks) or a complex join statement in SQL. This data hierarchy pairs well with graph-structured data stores and ultimately with the hierarchical user interfaces it's used within.

Strongly typed: Each level of a GraphQL query corresponds to a particular type, and each type describes a set of available fields. Similar to SQL, this allows GraphQL to provide descriptive error messages before executing a query. It also plays well with the strongly typed native environments of Obj-C and Java.

Protocol, not storage: Each GraphQL field on the server is backed by a function: code linking to your application layer. While we were building GraphQL to support News Feed, we already had a sophisticated feed ranking and storage model, along with existing databases and business logic. GraphQL had to leverage all this existing work to be useful, and so does not dictate or provide any backing storage. Instead, GraphQL takes advantage of your existing code by exposing your application layer, not your storage layer.

Introspective: A GraphQL server can be queried for the types it supports. This creates a powerful platform for tools and client software to build atop this information like code generation in statically typed languages, our application framework, Relay, or IDEs like GraphiQL (pictured below). GraphiQL helps developers learn and explore an API quickly without grepping the codebase or wrangling with cURL.

Version free: The shape of the returned data is determined entirely by the client's query, so servers become simpler and easier to generalize. When you're adding new product features, additional fields can be added to the server, leaving existing clients unaffected. When you're sunsetting older features, the corresponding server fields can be deprecated but continue to function. This gradual, backward-compatible process removes the need for an incrementing version number. We still support three years of released Facebook applications on a single version of our GraphQL API.
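As an illustration of the "defines a data shape" and "strongly typed" properties above, here is a toy sketch that first validates a selection against a tiny type map, then "executes" it by copying only the selected fields, so the result has exactly the selection's shape. The `types` map, `validate`, `project`, the sample data, and the error wording are all hypothetical. A real GraphQL server parses the query text, validates it against the full schema, and calls a resolver per field rather than filtering a prebuilt object.

```javascript
// Toy type system: each type maps field names to a type name.
const types = {
  Query: { me: 'User' },
  User: { name: 'String', email: 'String', friends: 'User' },
};
const scalars = new Set(['String']);

// Check a selection (field -> true or nested selection) before running it.
function validate(typeName, selection, path = typeName) {
  const errors = [];
  for (const [field, sub] of Object.entries(selection)) {
    const fields = types[typeName];
    const fieldType = Object.prototype.hasOwnProperty.call(fields, field) ? fields[field] : undefined;
    if (fieldType === undefined) {
      errors.push(`Cannot query field "${field}" on type "${typeName}" (at ${path}).`);
    } else if (scalars.has(fieldType)) {
      if (sub !== true) errors.push(`Field "${field}" of scalar type "${fieldType}" cannot have subfields.`);
    } else if (sub === true) {
      errors.push(`Field "${field}" of type "${fieldType}" needs a selection of subfields.`);
    } else {
      errors.push(...validate(fieldType, sub, `${path}.${field}`));
    }
  }
  return errors;
}

// Copy only the selected fields, so the result mirrors the selection.
function project(data, selection) {
  if (data == null) return null;
  if (Array.isArray(data)) return data.map(item => project(item, selection));
  const result = {};
  for (const [field, sub] of Object.entries(selection)) {
    result[field] = sub === true ? data[field] : project(data[field], sub);
  }
  return result;
}

const data = {
  me: {
    name: 'Alice',
    email: 'alice@example.com',
    friends: [
      { name: 'Bob', email: 'bob@example.com' },
      { name: 'Carol', email: 'carol@example.com' },
    ],
  },
};

const query = { me: { name: true, friends: { name: true } } };
console.log(validate('Query', query)); // → []
console.log(JSON.stringify(project(data, query)));
// → {"me":{"name":"Alice","friends":[{"name":"Bob"},{"name":"Carol"}]}}

console.log(validate('Query', { me: { nmae: true } }));
// → [ 'Cannot query field "nmae" on type "User" (at Query.me).' ]
```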

With GraphQL, we were able to build full-featured native News Feed on iOS in 2012, and on Android shortly after. Since then, GraphQL has become the primary way we build our mobile apps and the servers that power them. More than three years later, GraphQL powers almost all data-fetching in our mobile applications, serving millions of requests per second from nearly 1,000 shipped application versions.

When we built GraphQL in 2012 we had no idea how important it would become to how we build things at Facebook and didn't anticipate its value beyond Facebook. However, earlier this year we announced Relay, our application framework for the web and React Native built atop GraphQL. The community excitement for Relay inspired us to revisit GraphQL to evaluate every detail, make improvements, fix inconsistencies, and write a specification describing GraphQL and how it works.

Two months ago, we made our progress public and released a working draft of the GraphQL spec and a reference implementation: GraphQL.js. Since then, a community has started to form around GraphQL, and versions of the GraphQL runtime are being built in many languages, including Go, Ruby, Scala, Java, .NET, and Python. We've also begun to share some of the tools we use internally, like GraphiQL, an in-browser IDE, documentation browser, and query runner. GraphQL has also seen production usage outside Facebook, in a project for the Financial Times by consultancy Red Badger.

“GraphQL makes orchestrating data fetching so much simpler and it pretty much functions as a perfect isolation point between the front end and the back end” — Viktor Charypar, software engineer at Red Badger

While GraphQL is an established part of building products at Facebook, its use beyond Facebook is just beginning. Try out GraphiQL and help provide feedback on our specification. We think GraphQL can greatly simplify data needs for both client product developers and server-side engineers, regardless of what languages you're using in either environment, and we're excited to continue to improve GraphQL, help a community grow around it, and see what we can build together.